UX Design and Conversion Rate Optimization: How to master this dynamic duo

No matter what product you’ve helped build, great UX design and conversion rate optimization play equally important roles in its success and, by extension, the success of the business you work for.

After spending the last year helping with growth and retention for a well-known SaaS corp where my job has been to increase conversions on many screens within their large app, I’ve realized just how paramount this conversion rate optimization work can be. Over the course of that work, we scored wins worth six to seven figures in ARR.

I’ve gained a lot of insight in this area only to realize that it’s a skill that can help designers have an outsized impact. That’s why I want to encourage other designers to take a deep dive into mastering conversion rate work to support their team in improving the performance of their products and equip them with the process to do so. I promise you, when brought together, UX and conversion rate optimization design work can make quite the revenue-generating pair.

How is conversion rate optimization different from normal UX design work?

Conversion rate work is one of the rare instances where you are not doing any blue sky ideation but instead starting from a place where something already exists. This means you not only have qualitative data gathered from users but likely have quantitative data gathered through analytics to help you figure out your new solutions. This works in your favour as you can easily see what's not working in the existing flow, form a hypothesis around why it might be missing the mark, and experiment accordingly. For example, you can enter into ideation knowing there is an 18% drop-off when users arrive at your pricing screen, allowing you to focus your solution on this area of the design.
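To make that drop-off number concrete, here is a minimal sketch of how it could be calculated from analytics counts. The screen names and user counts are made up for illustration (chosen so the pricing screen lands on the 18% from the example above), and `drop_off_rates` is a hypothetical helper, not part of any analytics tool.

```python
from typing import List, Tuple


def drop_off_rates(funnel: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """For each step, return the share of users who reached it
    but did not reach the next step."""
    rates = []
    for (step, users), (_, next_users) in zip(funnel, funnel[1:]):
        rate = 1 - next_users / users if users else 0.0
        rates.append((step, rate))
    return rates


# Hypothetical counts of users reaching each screen (illustrative only).
funnel = [
    ("landing", 5000),
    ("pricing", 2000),
    ("checkout", 1640),
    ("confirmation", 1230),
]

rates = drop_off_rates(funnel)
for step, rate in rates:
    print(f"{step}: {rate:.0%} drop-off")
```

A table like this is usually enough to show which screen deserves your attention first.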

That’s cool. But what’s in it for me?

The answer is probably one of my favourite things about conversion rate work.

You’ll see that your team is super engaged around conversion rate work because the results are immediately measurable. Suggestions from different areas of expertise can be welcomed, leading to more potentially winning ideas.

Your impact is directly measurable, which often isn’t the case when it comes to UX design. And it’s just plain satisfying to see your work have a meaningful impact. By designing a successful optimization, you can show the return on investment of your work, which helps you land more projects like this and backs you up when you’re negotiating that well-deserved raise.

On top of that, you can approach the problem in the same way you tackle any other design problem: Understand the problem, frame the opportunity, design a possible solution, evaluate your design. So the learning curve for conversion rate optimization work is relatively small.

So with that, let’s dive into how to get it done. Here is my process for optimizing conversion flows through UX Design practices.

Step one: Dig in and understand the problem

Assuming this is an existing business with customers, you along with other stakeholders at your company should start by looking at a flow and forming a hypothesis around why the conversion rate is low.

For example, let’s look at a standard e-commerce checkout flow, like Amazon’s. Your hypothesis might sound like this:

“It’s hard for users to understand what their total cost is going to be because the taxes aren’t shown” or “It’s hard for users to enter their credit card because of a bug”.

Coming up with your hypothesis doesn’t need to take long but it shouldn’t be done in a silo.

Get your team together and ask each person to examine the existing flow and come up with their own ideas as to why it might be leading to a low rate of conversions. Next, narrow down all of the ideas until you have one that everyone agrees is the strongest hypothesis.

If you've done that and the problem is still not completely obvious, I suggest showing the flow to some users by doing moderated or unmoderated usability tests. By experiencing the issue directly through your user’s eyes and seeing them struggle through an attempted conversion, you will likely come away with a lot more ideas on how to improve the flow. This step will either solidify your team’s initial assumptions as to what’s broken or expose you to a fresh set of ideas as to how to optimize the flow.

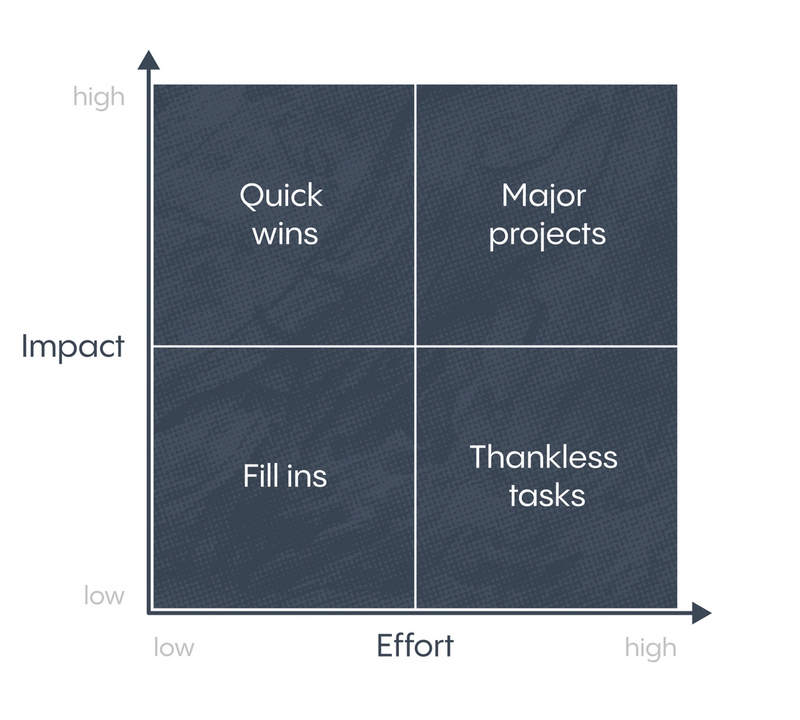

If you end up with a few potential hypotheses, your final step is to use an Impact vs. Effort Matrix to determine which ideas are worth moving forward with.

If the solution has a potential for high impact (like generating millions of dollars or adding customer delight) it will likely be worth a large effort. However, if the solution requires a high amount of effort but is not going to necessarily create a major impact, it’s probably not the right solution.
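If your team prefers a spreadsheet-style view of the matrix, the sorting logic can be sketched in a few lines. This is only an illustration: the 1 to 5 scores, the threshold, the example ideas, and the quadrant labels ("quick win", "big bet", "fill-in", "avoid") are my own assumptions, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    impact: int  # 1 (low) to 5 (high), scored by the team
    effort: int  # 1 (low) to 5 (high), scored by the team


def quadrant(idea: Idea, threshold: int = 3) -> str:
    """Place an idea in one of four Impact vs. Effort quadrants."""
    high_impact = idea.impact >= threshold
    high_effort = idea.effort >= threshold
    if high_impact and not high_effort:
        return "quick win"
    if high_impact and high_effort:
        return "big bet"
    if not high_impact and not high_effort:
        return "fill-in"
    return "avoid"  # low impact, high effort


# Hypothetical ideas and scores (illustrative only).
ideas = [
    Idea("Show taxes before checkout", impact=4, effort=2),
    Idea("Rebuild the credit card form", impact=5, effort=4),
    Idea("Restyle the footer links", impact=1, effort=1),
    Idea("Re-platform checkout for a copy tweak", impact=2, effort=5),
]

# Highest impact first; ties broken by lowest effort.
for idea in sorted(ideas, key=lambda i: (-i.impact, i.effort)):
    print(f"{idea.name}: {quadrant(idea)}")
```

The scores themselves are still a team judgment call; the code only keeps the ranking consistent once you have them.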

Pro tip 1: If you can’t get everyone aligned on one experiment, then agree to frame out multiple experiments. Conversion rate optimization is a game with multiple at-bats where you need a mix of big swings and small ones.

Step two: Build your foundation

Next up, you should capture your problem statement, your hypothesis around how to improve it, and any analytical insights (from data, or from usability testing) in one place. Ultimately, what you’re creating here is a testing plan that you can refer back to during design and when you present your solutions.

In other words, a design brief.

Everyone involved in the project should agree with what is wrong and have an agreement on what direction needs to be taken to correct the problem. Your design brief will outline this as a problem statement, but there should be no specifics around the solutions that will be built at this stage.

You will also want to write out success statements that provide a concise way to communicate your team’s vision for the ultimate project win and get everyone aligned with what the desired outcome should be.

I suggest consulting your team for their version of what success looks like by having them finish the statement: “success looks like…”.

This could be:

- “Success looks like making it easier for users to see their tax information so that they are ready to convert”

- “Success looks like a 5% increase in conversions on this page which would lead to a $1000 increase in revenue a week”
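A revenue-based success statement like the second one is easier to defend if you can show the arithmetic behind it. Here is a rough sketch, assuming the 5% is a relative lift in conversion rate and that revenue scales with conversions. The visitor count, baseline conversion rate, and average order value are hypothetical numbers picked so the result matches the $1000 in the example.

```python
def weekly_revenue(visitors: int, conversion_rate: float,
                   average_order_value: float) -> float:
    """Revenue per week = visitors x conversion rate x average order value."""
    return visitors * conversion_rate * average_order_value


# Hypothetical inputs (illustrative only).
weekly_visitors = 10_000
baseline_rate = 0.04        # 4% of visitors convert today
relative_lift = 0.05        # the "5% increase" from the success statement
average_order_value = 50.0  # dollars per conversion

baseline = weekly_revenue(weekly_visitors, baseline_rate, average_order_value)
improved = weekly_revenue(
    weekly_visitors, baseline_rate * (1 + relative_lift), average_order_value
)
gain = improved - baseline

print(f"Baseline revenue per week: ${baseline:,.0f}")
print(f"Extra revenue per week:    ${gain:,.0f}")
```

Swap in your own numbers with your data team before putting a dollar figure in the brief.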

You’ll want to write out a set of statements that define the method in which you are going to design the solution, otherwise known as design principles. This isn’t to say we are defining a solution here, rather the approaches and principles that you are going to abide by. Add your hypothesis and insights gathered from usability testing and you’ll have a road map to ensure you are in complete alignment with what needs to be built.

Step three: The Fun Part

Now it’s time to design. Head into Figma and create a solution using your design brief as your guide. At this point, I have no doubt you will be overflowing with ideas and well on your way to making something awesome. Once you’ve completed your design, present it to the stakeholders who helped you define the problem (step one) so you can get feedback. You want to make sure that your solution is meeting everyone's agreed-upon vision of success laid out in the original brief. Be sure to update your design brief with more detail as the project progresses. This ensures everyone remains aligned when it comes time to evaluate potential solutions and the results of the experiment.

When you’re designing, it’s important to remember that if the solution you come up with involves many changes to the flow or screen, you will have a hard time determining which change successfully increased conversions. However, if you only implement 1 or 2 small changes (like moving a button just 10px higher), while easy to measure, they may not be impactful enough to move the needle and the conversion rate may stay the same.

The key is to find a balance between big, impactful (but hard-to-measure) changes and tiny, incremental (but easily measurable) changes. Each experiment will be different, so you must take the time to evaluate each option and pick a point you are comfortable with. This can be tough. Sometimes you expect a small change to have a big impact, opt for that direction, and it turns out to be too small to do anything. On the other hand, sometimes you make a big change that fails and then you have no idea why. Knowing your problem and what will be considered a success will help guide the scope of the solution, so keep that design brief on hand.

Pro tip 2: The big swings involve changing multiple variables, making it tough to pinpoint what worked or didn’t. Small swings can help test very specific hypotheses, but there can be problems with doing too many of them at once. Basically, we’re always trying to balance the largest possible impact against the most measurable result, and the two work against each other.

If you’re creating a complex flow or changing lots of things, it may be worth doing a round of usability testing to get an initial sense of whether your proposed idea actually works. Testing at this stage is also an opportunity to check that the original intention of the page is not affected by your new design. If you decide to forgo this round of testing, you might spend a bunch of time and money creating another flow with an even worse conversion rate than the original or, worse, damage the original function of the page with all of your changes and have absolutely no idea. So, consider yourself warned.

Step four: Test and measure

As a designer, you likely won’t be responsible for taking the lead in this step but it is certainly helpful to understand it anyway. Synthesizing the results is often left in the hands of Data Analysts, Data Scientists or Product Managers but it is your job to understand and hypothesize on the results.

Once you’ve got an approved design, it should get built and A/B tested. A/B testing is a method of comparing two versions of a solution against each other to determine which one performs better. Most analysis can be done using basic event tracking (Mixpanel, Flurry, etc.) and statistics. You can also use an online A/B test significance calculator if you don’t have an in-house data scientist.
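For a sense of what the statistics look like under the hood, here is a minimal sketch of one common way to compare two conversion rates: a two-proportion z-test, using only Python’s standard library. The visitor and conversion counts are hypothetical, and your data team may well prefer a different method, so treat this as an illustration rather than the way it has to be done.

```python
from math import sqrt
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between
    the conversion rates of variant B and control A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that A and B convert equally well.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results (illustrative only):
# control A converts 400 of 10,000 users, variant B converts 460 of 10,000.
z, p_value = two_proportion_z_test(400, 10_000, 460, 10_000)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")

# A common convention is to call the result significant when p < 0.05.
print("significant" if p_value < 0.05 else "not significant")
```

A positive z means the variant converted better than the control; the p-value tells you how surprising that difference would be if the two versions actually performed the same.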

Depending on how many people use the app or flow in question, A/B tests might take months. This is because you need statistically significant results for the test to be meaningful, which means you need enough people in both test groups to produce worthwhile insights.
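To see why a test can drag on for months, it helps to estimate how many users each group needs before you start. The sketch below uses a standard sample-size approximation for comparing two proportions, with a 5% significance level and 80% power as assumed defaults. The baseline rate, expected lift, and weekly traffic are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(p_base: float, p_variant: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH group to detect the difference
    between two conversion rates with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_avg = (p_base + p_variant) / 2
    numerator = (
        z_alpha * sqrt(2 * p_avg * (1 - p_avg))
        + z_beta * sqrt(p_base * (1 - p_base) + p_variant * (1 - p_variant))
    ) ** 2
    return ceil(numerator / (p_variant - p_base) ** 2)


# Hypothetical scenario (illustrative only): a 4% baseline conversion rate,
# hoping to detect a 5% relative lift, with 10,000 visitors per week
# split evenly between control and variant.
baseline_rate = 0.04
variant_rate = baseline_rate * 1.05
weekly_visitors = 10_000

n_per_group = sample_size_per_group(baseline_rate, variant_rate)
weeks = ceil(2 * n_per_group / weekly_visitors)

print(f"Users needed per group: {n_per_group:,}")
print(f"Estimated duration: {weeks} weeks")
```

With these made-up inputs the requirement comes out at well over 100,000 users per group, which is why small lifts on modest traffic take so long to confirm. Bigger expected lifts shrink the number quickly.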

So, if you have low traffic and don’t think you can get statistically significant results, usability testing might be the best data you can get. If you have plenty of users, your test will produce either a pass (conversion increase), fail (conversion decrease) or neutral result (no detectable change). Again, don’t be alarmed if A/B testing takes a while to produce significant data. Even large companies, who run these tests regularly, have limited control over how long it can take.

If your result is a fail, you and your team should discuss what went wrong and how you can go back to step one and try again. If the solution is a pass, it is worth discussing how you can build on what you learned and continue to improve the flow. And if the result is neutral, you should still look into whether the user experience was improved, in which case the new design may still have merit and should be shipped.

Next Steps: Learning as your primary goal

It’s not uncommon for there to be more failures than passes when you do this work. But don’t lose sight of how learning from failure can be as important as increasing revenue. Ultimately, what you learn from your failed tests will lead to revenue, eventually, because failed tests are often sources of big insights. There are a lot of opportunities to make new experiments based on the findings that come from your failures. Neutral results, on the other hand, are concerning because you learn very little even though the test still took up a lot of time. So if your results are neutral, it could signal that you need to try again and take bigger swings.

Even if you think you’ve designed a home run solution, sometimes it just doesn’t make it out of the park. And that’s ok. In those failures, you’re learning from experiments that bring in the insights you need to get closer to the winning solution. By sticking to your process, I promise you’ll generate wins a lot quicker. And remember: If that long-sought-after win generates a big boost in revenue, you only need one to make each failure worth it.

Nick Foster is the founder of Sixzero, an agency that helps companies design apps and software with measurable impact.

Illustration by: Muti, Folio Art